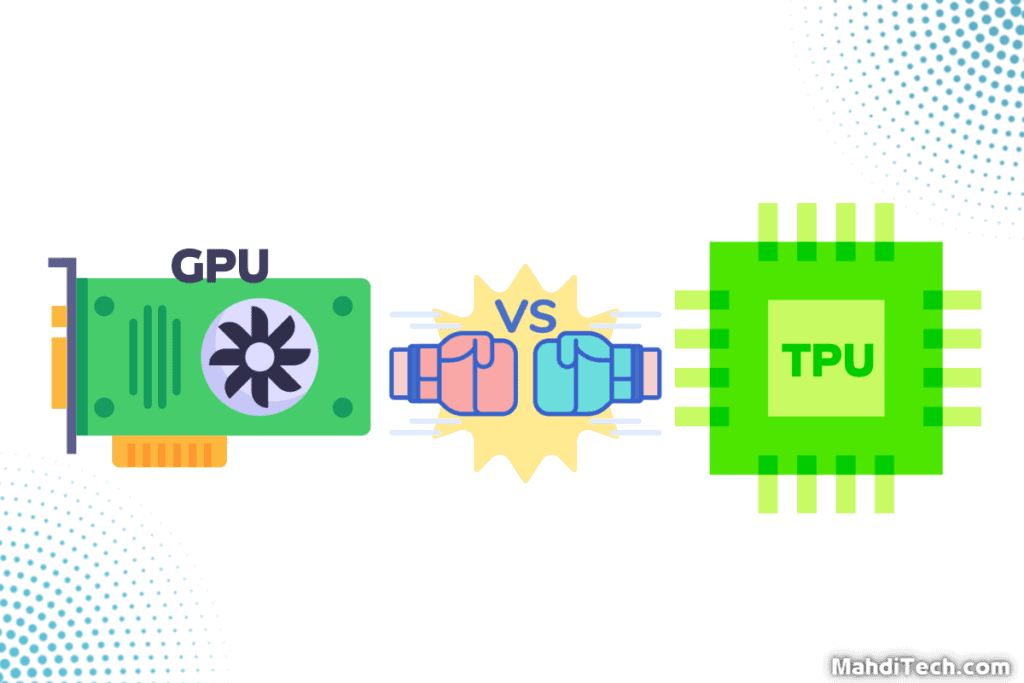

Ever found yourself questioning the difference between a TPU and GPU, particularly when configuring deep learning models?

You’re not alone. The TPU vs GPU debate is a common one, fraught with technical jargon and complex specifications. But worry not: this guide aims to simplify the complex.

In the following sections, we’ll explore the fundamental differences between TPUs and GPUs, compare their performance, and discuss their pros and cons.

Our goal? To arm you with the knowledge you need to make an informed decision perfectly tailored to your needs in 2023. This isn’t just another tech article. It’s your path to understanding and making the most of these powerful computing options.

So, let’s get started.

Quick Summary

GPUs and TPUs are designed for different tasks.

GPUs are versatile, performing well in graphics rendering and varied computations, but consume considerable power.

TPUs are purpose-built for machine learning tasks, efficiently handling high-volume, low-precision calculations with less energy.

Choosing between them depends on your specific computational requirements and energy efficiency needs.

What is a GPU?

A GPU, or Graphics Processing Unit, is specialized hardware designed to render images, animations, and videos for a computer’s screen.

Initially, these processors were used primarily for gaming and graphics-heavy applications. However, in the ongoing TPU vs GPU discussion, we see GPUs have found a new purpose.

They’ve become handy for running machine learning models because they can perform many calculations simultaneously.

This ability speeds up computations and makes them efficient, giving GPUs a significant role in today’s technology landscape.

How Does a GPU Work?

At its core, a GPU is a testament to the power of parallel processing.

Unlike traditional CPUs that handle a few software threads at a time, a GPU is designed to handle hundreds, sometimes thousands, of threads simultaneously. This capability is a game-changer in operations like rendering images or running machine learning algorithms.

The workings of a GPU are centered around executing basic arithmetic operations—but lots of them all at once. A GPU can process complex graphics and datasets swiftly and efficiently by splitting large computational tasks into smaller ones and executing them concurrently.
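The split-and-run-concurrently idea can be sketched in plain Python. This is only an illustration of the pattern, not GPU code: the function names (`scale_and_add`, `split`, `parallel_saxpy`) are made up for this example, and a thread pool stands in for the many hardware threads a real GPU would use.

```python
from concurrent.futures import ThreadPoolExecutor


def scale_and_add(chunk_a, chunk_b, alpha):
    """The small, uniform operation applied to one slice of the data."""
    return [alpha * a + b for a, b in zip(chunk_a, chunk_b)]


def split(values, n_chunks):
    """Cut a list into contiguous slices of near-equal size."""
    size = max(1, -(-len(values) // n_chunks))  # ceiling division, at least 1
    return [values[i:i + size] for i in range(0, len(values), size)]


def parallel_saxpy(a, b, alpha, n_workers=4):
    """Compute alpha * a[i] + b[i] for every i by handing slices to workers."""
    chunks_a = split(a, n_workers)
    chunks_b = split(b, n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        # map() preserves input order, so the parts come back in sequence
        parts = list(pool.map(scale_and_add, chunks_a, chunks_b,
                              [alpha] * len(chunks_a)))
    # Stitch the per-slice results back into one list
    return [x for part in parts for x in part]


a = list(range(8))
b = [10] * 8
result = parallel_saxpy(a, b, alpha=2)
print(result)  # [10, 12, 14, 16, 18, 20, 22, 24]
```

Every element is computed independently of the others, which is what makes the work easy to divide. A GPU applies the same principle at a much larger scale and in hardware.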

This capacity for parallel processing has made GPUs a valuable resource for creating stunning visual experiences and powering machine learning and AI applications.

What is a TPU?

A TPU, or Tensor Processing Unit, is a type of processor explicitly designed to accelerate machine learning workloads.

Google’s TPU was built from the ground up with a focus on machine learning and the unique requirements of these workloads. Unlike general-purpose CPUs (central processing units) and GPUs, the TPU is optimized for a specific set of tasks: the training and inference of neural networks.

A TPU operates by swiftly performing a high volume of low-precision calculations often found in machine learning tasks. It harnesses the power of a unique architecture to manage complex matrix operations at the heart of neural networks.
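To make "matrix operations at the heart of neural networks" concrete, here is a minimal sketch of one dense layer written as a matrix multiplication followed by a ReLU activation. The helper names and the tiny input and weight values are invented for illustration, and the pure-Python loops only show the arithmetic that an accelerator would carry out in hardware.

```python
def matmul(a, b):
    """Multiply an (m x k) matrix by a (k x n) matrix, both lists of rows."""
    k = len(b)
    assert all(len(row) == k for row in a), "inner dimensions must match"
    cols = list(zip(*b))  # columns of b
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]


def relu(matrix):
    """Replace every negative entry with zero."""
    return [[max(0.0, v) for v in row] for row in matrix]


# Hypothetical batch of 2 examples with 2 features each
inputs = [[1.0, 2.0],
          [0.5, -1.0]]

# Hypothetical weights mapping 2 features to 3 outputs
weights = [[1.0, 0.0, -1.0],
           [0.5, 1.0, 2.0]]

layer_output = relu(matmul(inputs, weights))
print(layer_output)  # [[2.0, 2.0, 3.0], [0.0, 0.0, 0.0]]
```

Even this toy layer needs 2 x 2 x 3 = 12 multiply-add steps. Real networks repeat the same pattern with matrices that have thousands of rows and columns, which is why hardware dedicated to matrix multiplication pays off.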

This allows TPUs to deliver superior performance for the demanding computations required in machine learning applications. This specialization has made TPUs a go-to for many developers and data scientists running their machine learning workloads.

How Does a TPU Work?

In operation, Tensor Processing Units are specifically engineered to manage the requirements of machine learning tasks. The cornerstone of a TPU’s functionality is its ability to handle many matrix operations simultaneously—a necessity for deep learning algorithms.

Instead of focusing on rendering a graphical interface like GPUs, TPUs dedicate their resources to rapidly processing the high volumes of lower-precision computations found in deep learning workloads.
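A rough way to see what "lower-precision" means is to simulate it. The sketch below assumes the reduced format is bfloat16 (a 16-bit format that TPUs support) and approximates it by keeping only the top 16 bits of a 32-bit float. Real hardware typically rounds rather than truncates, so treat `to_bfloat16` as a simplified, hypothetical helper, not a description of the actual circuit.

```python
import struct


def to_bfloat16(x):
    """Truncate a float to bfloat16 precision (sign, 8 exponent bits, 7 mantissa bits).

    Simplification: this drops the low 16 bits of the float32 encoding
    instead of rounding to nearest.
    """
    bits = struct.unpack(">I", struct.pack(">f", x))[0]
    bits &= 0xFFFF0000
    return struct.unpack(">f", struct.pack(">I", bits))[0]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def dot_low_precision(a, b):
    """Reduce the inputs to bfloat16, but accumulate the sum at full precision."""
    return sum(to_bfloat16(x) * to_bfloat16(y) for x, y in zip(a, b))


a = [0.1, 0.2, 0.3, 0.4]
b = [1.5, 2.5, 3.5, 4.5]

full = dot(a, b)
low = dot_low_precision(a, b)
relative_error = abs(low - full) / abs(full)

print(to_bfloat16(3.14159))  # 3.140625
print(full, low, relative_error)
```

The low-precision result differs from the full-precision one by a small relative error. Neural networks often tolerate that level of error, which is the trade-off that lets specialized hardware spend fewer bits, and less energy, on each calculation.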

This ability to concentrate on a specific type of computation means that a TPU can work efficiently and swiftly, making it an attractive choice for developers and scientists working on complex machine-learning programs.

However, it’s important to note that TPUs are not plug-and-play: they require a specific setup and coding approach to take full advantage of their capabilities.

Key Differences Between GPU and TPU

In this section, we’ll highlight three key areas of distinction between a GPU and a TPU: their architectural differences, processing capabilities, and specific use cases and applications.

These aspects underline the unique strengths and weaknesses of each technology.

Architectural Differences

Architecturally, a Graphics Processing Unit (GPU) and a Tensor Processing Unit (TPU) are designed with different objectives.

A GPU, traditionally used for graphics rendering, features a design that can manage various tasks. This versatility makes GPUs a popular choice not only in gaming but also in fields like data science.

On the other hand, a TPU is purpose-built for machine learning tasks, with an architecture focused on performing a high volume of simple calculations at high speed.

Because the design is optimized for one kind of workload, it can deliver more useful work for the same power budget.

Processing Capabilities

Regarding processing capabilities, the differences between GPUs and Tensor Processing Units (TPUs) become more apparent. With its robust, flexible design, the former excels at various tasks, including complex mathematical computations required in multiple applications. Yet, it can consume considerable power in the process.

TPUs, however, shine brightly when tasked with deep learning workloads, especially those involving neural network inference. They are tailored to handle many low-precision calculations quickly and efficiently.

As a result, TPUs can deliver remarkable processing power while typically using less energy. This makes them an attractive choice for machine learning tasks where power consumption and efficiency are paramount considerations.

Use Cases and Applications

The choice between GPUs and TPUs often depends on the use case. With their excellent graphical performance, GPUs have traditionally been used in applications like video rendering and gaming.

However, they’ve also found utility in general-purpose computation, including scientific computing and artificial intelligence.

In contrast, TPUs, initially designed for use in Google data centers, are optimized for specific workloads involving neural network training and inference.

Their ability to swiftly handle large volumes of low-precision calculations makes them especially well-suited for complex machine learning tasks. This specialized focus can make TPUs an excellent choice for heavy machine learning workloads.

Pros and Cons of GPUs

Pros of GPUs:

Cons of GPUs:

Pros and Cons of TPUs

Pros of TPUs:

Cons of TPUs:

TPU vs GPU: Which is Better?

The choice between a GPU and a TPU largely depends on the use case.

A Tensor Processing Unit might be better for deep learning and training models, especially those involving high-volume, low-precision calculations. It’s designed for such tasks and can deliver superior performance, often at a lower training cost.

On the other hand, if the goal is to run a wide array of tasks, including graphics-heavy computer programs, then a GPU might be a more versatile tool.

It works as a coprocessor alongside the CPU and can handle many different computations efficiently. Ultimately, the ‘better’ choice hinges on the specific requirements of the task at hand.

Conclusion

In conclusion, both GPUs and TPUs offer unique advantages depending on the application.

GPUs, with their versatility and powerful computational abilities, excel in a wide range of tasks, including graphics rendering and general computations.

On the other hand, TPUs, specifically designed to handle machine learning workloads, offer impressive performance and efficiency in tasks involving high volumes of low-precision calculations.

The choice between the two ultimately depends on the task at hand. For deep learning and specific machine learning tasks, a TPU might be the better option. Conversely, for diverse computational needs including graphic-intensive tasks, a GPU might be more fitting.

Understanding these key differences will allow you to make an informed decision based on your specific computational needs.